My last blog entry talked about a database I was dealing with that consisted entirely of indecipherably-named heaps with multiple-column logical keys.

This time I have a database that is not normalized and has just plain bad data in it that’s got to be fixed. It was easy to fix, though, so this post is not rocket science, but I figured I’d do a quick write-up about it anyway.

Let me give you an example of what I was dealing with using data from good old AdventureWorks.

The following builds a temporary table consisting of the total sales grouped by Product, Customer, and SalesPerson. Only Products that are categorized as Clothing are used to populate the table, just to keep it relatively small for this example.

if object_id('tempdb..#ProdSalesInfo','U') is not null drop table #ProdSalesInfoNotice that I included the Product’s Name in this table also. This was similar to the table that I was working with. There was no “master” table of Products… no table that I could JOIN with to acquire the Name of the Product… all the Product Names were only in this statistical table… duplicated over and over as you can see:

go

select p.ProductID

,p.Name

,h.CustomerID

,h.SalesPersonID

,sum(d.LineTotal) as Dollars

into #ProdSalesInfo

from Production.Product p

join Production.ProductSubcategory c on p.ProductSubcategoryID=c.ProductSubcategoryID

join Sales.SalesOrderDetail d on p.ProductID=d.ProductID

join Sales.SalesOrderHeader h on d.SalesOrderID=h.SalesOrderID

where c.ProductCategoryID=3 /* Clothing Items */

and h.SalesPersonID is not null

group by p.ProductID

,p.Name

,h.CustomerID

,h.SalesPersonID

;

/*

(4716 row(s) affected)

*/

select *So, for example, there are 70 entries in the table for ProductID 709, and all of them have the same description duplicated over and over for each of those entries. We’re in Non-normalized Territory.

from #ProdSalesInfo

order by ProductID

;

/*

ProductID Name CustomerID SalesPersonID Dollars

--------- ------------------------------- ---------- ------------- ----------

709 Mountain Bike Socks, M 11 282 34.200000

709 Mountain Bike Socks, M 17 275 102.600000

709 Mountain Bike Socks, M 18 275 45.600000

709 Mountain Bike Socks, M 20 283 110.397600

. . .

857 Men's Bib-Shorts, L 184 277 431.952000

857 Men's Bib-Shorts, L 197 275 53.994000

857 Men's Bib-Shorts, L 203 281 107.988000

. . .

884 Short-Sleeve Classic Jersey, XL 688 290 323.940000

884 Short-Sleeve Classic Jersey, XL 692 287 388.728000

884 Short-Sleeve Classic Jersey, XL 695 275 485.910000

884 Short-Sleeve Classic Jersey, XL 700 279 726.295076

(4716 rows total)

*/

However, it was worse than this.

Most of the names for a given ProductID were consistent, but there were inconsistent names scattered here and there for most of the ProductIDs.

Let’s duplicate that situation by altering the names of a random 3% sampling of all the entries in the table:

with cte asNow when we take a look at the table’s contents, we can see that there are junk names scattered randomly throughout (your results will differ):

(

select Name

,abs(checksum(newid()))%100 as RandUpTo100

from #ProdSalesInfo

)

update cte

set Name=Name+'-JUNK'

where RandUpTo100<=3 /* 3% of the items */

;

/*

(174 row(s) affected)

*/

select *A handful of the Products don’t have any inconsistent names at all, but rather have a uniform name for each of their entries:

from #ProdSalesInfo

order by ProductID

;

/*

ProductID Name CustomerID SalesPersonID Dollars

--------- ------------------------------------ ---------- ------------- ----------

709 Mountain Bike Socks, M-JUNK 11 282 34.200000

709 Mountain Bike Socks, M-JUNK 17 275 102.600000

709 Mountain Bike Socks, M 18 275 45.600000

709 Mountain Bike Socks, M 20 283 110.397600

. . .

857 Men's Bib-Shorts, L 184 277 431.952000

857 Men's Bib-Shorts, L-JUNK 197 275 53.994000

857 Men's Bib-Shorts, L 203 281 107.988000

. . .

884 Short-Sleeve Classic Jersey, XL 688 290 323.940000

884 Short-Sleeve Classic Jersey, XL-JUNK 692 287 388.728000

884 Short-Sleeve Classic Jersey, XL 695 275 485.910000

884 Short-Sleeve Classic Jersey, XL 700 279 726.295076

(4716 rows total)

*/

select ProductIDBut most of the Products have more than one name:

from #ProdSalesInfo

group by ProductID

having count(distinct Name)=1

;

/*

ProductID

---------

710

866

*/

select ProductIDWe want to fix the data in this table so that each of the Products has one and only one name. For any given ProductID, the name that has the most entries is the “master”… it is the “Good” Name… and all other names for the ProductID should be overwritten with that Good Name.

from #ProdSalesInfo

group by ProductID

having count(distinct Name)>1

;

/*

ProductID

---------

709

712

714

. . .

881

883

884

(30 rows total)

*/

As I mentioned previously, this is easy to do, as long as you attack the problem one step at a time.

Let’s take a look at our problem Products (those with more than one name) and see how many entries there are for each of their names:

with ProblemProds asI sorted the output by ProductID and then in descending order of NumEntries. This way the name with the most entries will be output first for each ProductID… those are our Good Names.

(

select ProductID

from #ProdSalesInfo

group by ProductID

having count(distinct Name)>1

)

select ProductID

,Name

,count(*) as NumEntries

from #ProdSalesInfo

where ProductID in (select ProductID from ProblemProds)

group by ProductID

,Name

order by ProductID

,NumEntries desc

;

/*

ProductID Name NumEntries

--------- ------------------------------------ ----------

709 Mountain Bike Socks, M 69

709 Mountain Bike Socks, M-JUNK 4

712 AWC Logo Cap 337

712 AWC Logo Cap-JUNK 11

714 Long-Sleeve Logo Jersey, M 254

714 Long-Sleeve Logo Jersey, M-JUNK 8

. . .

881 Short-Sleeve Classic Jersey, S 131

881 Short-Sleeve Classic Jersey, S-JUNK 4

883 Short-Sleeve Classic Jersey, L 173

883 Short-Sleeve Classic Jersey, L-JUNK 9

884 Short-Sleeve Classic Jersey, XL 177

884 Short-Sleeve Classic Jersey, XL-JUNK 4

(60 rows total)

*/

But how do we filter out those Good Names within a query?

It’s the good old Window Functions to the rescue. We can use the ROW_NUMBER() ranking function to assign a row number to our rows. For each ProductID (PARTITION BY ProductID), we want to assign a row number (starting with 1) to the rows in descending order of the number of entries for the Name (ORDER BY COUNT(*) DESC):

with ProblemProds asBy applying this ROW_NUMBER() ranking function to the query, we can see that all the rows with a value of 1 are the names with the most occurrences… those are our Good Names that we can use to overwrite all the Bad Names.

(

select ProductID

from #ProdSalesInfo

group by ProductID

having count(distinct Name)>1

)

select ProductID

,Name

,count(*) as NumEntries

,row_number() over (partition by ProductID

order by count(*) desc) as RowNum

from #ProdSalesInfo

where ProductID in (select ProductID from ProblemProds)

group by ProductID

,Name

order by ProductID

,NumEntries desc

;

/*

ProductID Name NumEntries RowNum

--------- ------------------------------------ ---------- ------

709 Mountain Bike Socks, M 69 1

709 Mountain Bike Socks, M-JUNK 4 2

712 AWC Logo Cap 337 1

712 AWC Logo Cap-JUNK 11 2

714 Long-Sleeve Logo Jersey, M 254 1

714 Long-Sleeve Logo Jersey, M-JUNK 8 2

. . .

881 Short-Sleeve Classic Jersey, S 131 1

881 Short-Sleeve Classic Jersey, S-JUNK 4 2

883 Short-Sleeve Classic Jersey, L 173 1

883 Short-Sleeve Classic Jersey, L-JUNK 9 2

884 Short-Sleeve Classic Jersey, XL 177 1

884 Short-Sleeve Classic Jersey, XL-JUNK 4 2

(60 rows total)

*/

So here are our Good Names… the ones with a row number equal to 1:

with ProblemProds asNow that we have that set of “Good” Names, we can JOIN it back to our table in order to find all the entries that have the Bad Names… in other words, those entries whose Name is not equal to the Good Name.

(

select ProductID

from #ProdSalesInfo

group by ProductID

having count(distinct Name)>1

)

,ProdNameSummary as

(

select ProductID

,Name

,count(*) as NumEntries

,row_number() over (partition by ProductID

order by count(*) desc) as RowNum

from #ProdSalesInfo

where ProductID in (select ProductID from ProblemProds)

group by ProductID

,Name

)

select ProductID

,Name as GoodName

from ProdNameSummary

where RowNum=1

;

/*

ProductID GoodName

--------- -------------------------------

709 Mountain Bike Socks, M

712 AWC Logo Cap

714 Long-Sleeve Logo Jersey, M

715 Long-Sleeve Logo Jersey, L

875 Racing Socks, L

881 Short-Sleeve Classic Jersey, S

883 Short-Sleeve Classic Jersey, L

884 Short-Sleeve Classic Jersey, XL

(30 rows total)

*/

with ProblemProds asThat’s our list of the entries with the Bad Names and the Good Name that we should use to overwrite them.

(

select ProductID

from #ProdSalesInfo

group by ProductID

having count(distinct Name)>1

)

,ProdNameSummary as

(

select ProductID

,Name

,count(*) as NumEntries

,row_number() over (partition by ProductID

order by count(*) desc) as RowNum

from #ProdSalesInfo

where ProductID in (select ProductID from ProblemProds)

group by ProductID

,Name

)

,GoodNames as

(

select ProductID

,Name as GoodName

from ProdNameSummary

where RowNum=1

)

select p.ProductID

,p.Name as BadName

,c.GoodName

from #ProdSalesInfo p

join GoodNames c on p.ProductID=c.ProductID

where p.Name<>GoodName

order by p.ProductID

;

/*

ProductID BadName GoodName

--------- ------------------------------------ -------------------------------

709 Mountain Bike Socks, M-JUNK Mountain Bike Socks, M

709 Mountain Bike Socks, M-JUNK Mountain Bike Socks, M

709 Mountain Bike Socks, M-JUNK Mountain Bike Socks, M

709 Mountain Bike Socks, M-JUNK Mountain Bike Socks, M

712 AWC Logo Cap-JUNK AWC Logo Cap

712 AWC Logo Cap-JUNK AWC Logo Cap

. . .

883 Short-Sleeve Classic Jersey, L-JUNK Short-Sleeve Classic Jersey, L

883 Short-Sleeve Classic Jersey, L-JUNK Short-Sleeve Classic Jersey, L

884 Short-Sleeve Classic Jersey, XL-JUNK Short-Sleeve Classic Jersey, XL

884 Short-Sleeve Classic Jersey, XL-JUNK Short-Sleeve Classic Jersey, XL

884 Short-Sleeve Classic Jersey, XL-JUNK Short-Sleeve Classic Jersey, XL

884 Short-Sleeve Classic Jersey, XL-JUNK Short-Sleeve Classic Jersey, XL

(176 rows total)

*/

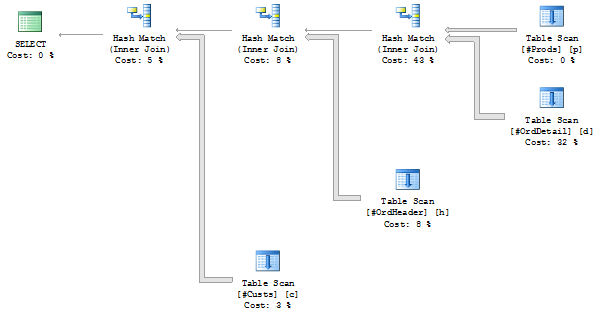

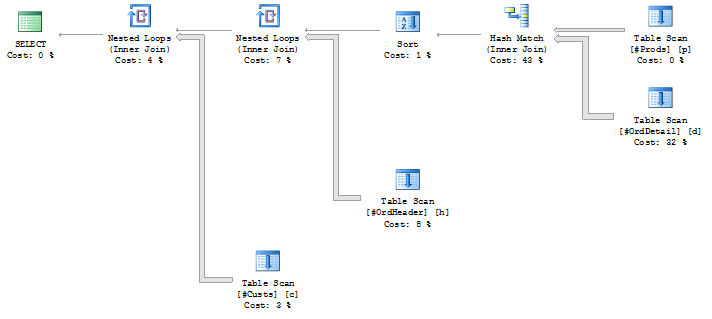

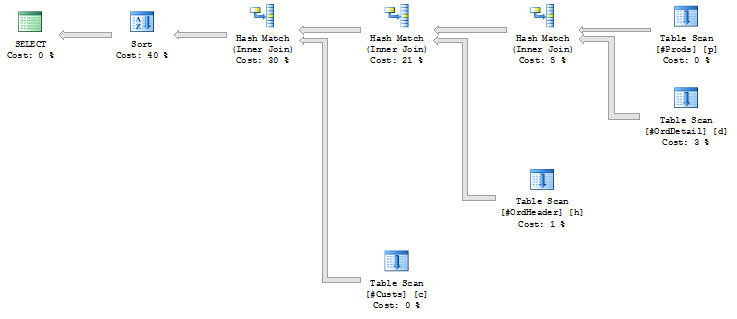

To be honest, though, it bugs me that the above query is scanning the table 3 times. There’s no compelling reason to use the ProblemProds CTE anymore. We could just perform the ROW_NUMBER() assignment to ALL products, whether they have Bad Names or not. Our two ProductIDs (710 and 866) that already have only 1 name still won’t be in the final output because the predicate of p.Name<>GoodName will filter them out anyway.

So let’s get rid of the ProblemProds CTE, and we’ll end up with the same output, but this time we will have only scanned our table 2 times instead of 3:

with ProdNameSummary asSo now it’s just a simple matter of commenting out the SELECT command (and the ORDER BY clause) and inserting an UPDATE command to fix those Bad Names:

(

select ProductID

,Name

,count(*) as NumEntries

,row_number() over (partition by ProductID

order by count(*) desc) as RowNum

from #ProdSalesInfo

group by ProductID

,Name

)

,GoodNames as

(

select ProductID

,Name as GoodName

from ProdNameSummary

where RowNum=1

)

select p.ProductID

,p.Name as BadName

,c.GoodName

from #ProdSalesInfo p

join GoodNames c on p.ProductID=c.ProductID

where p.Name<>GoodName

order by p.ProductID

;

/*

ProductID BadName GoodName

--------- ------------------------------------ -------------------------------

709 Mountain Bike Socks, M-JUNK Mountain Bike Socks, M

709 Mountain Bike Socks, M-JUNK Mountain Bike Socks, M

709 Mountain Bike Socks, M-JUNK Mountain Bike Socks, M

709 Mountain Bike Socks, M-JUNK Mountain Bike Socks, M

712 AWC Logo Cap-JUNK AWC Logo Cap

712 AWC Logo Cap-JUNK AWC Logo Cap

. . .

883 Short-Sleeve Classic Jersey, L-JUNK Short-Sleeve Classic Jersey, L

883 Short-Sleeve Classic Jersey, L-JUNK Short-Sleeve Classic Jersey, L

884 Short-Sleeve Classic Jersey, XL-JUNK Short-Sleeve Classic Jersey, XL

884 Short-Sleeve Classic Jersey, XL-JUNK Short-Sleeve Classic Jersey, XL

884 Short-Sleeve Classic Jersey, XL-JUNK Short-Sleeve Classic Jersey, XL

884 Short-Sleeve Classic Jersey, XL-JUNK Short-Sleeve Classic Jersey, XL

(176 rows total)

*/

with ProdNameSummary asAnd we’re done!

(

select ProductID

,Name

,count(*) as NumEntries

,row_number() over (partition by ProductID

order by count(*) desc) as RowNum

from #ProdSalesInfo

group by ProductID

,Name

)

,GoodNames as

(

select ProductID

,Name as GoodName

from ProdNameSummary

where RowNum=1

)

/*

select p.ProductID

,p.Name as BadName

,c.GoodName

*/

update p

set Name=c.GoodName

from #ProdSalesInfo p

join GoodNames c on p.ProductID=c.ProductID

where p.Name<>GoodName

/*

order by p.ProductID

*/

;

/*

(176 row(s) affected)

*/

Let’s check our work to make sure that there are no ProductIDs with more than one Name:

select ProductIDLooks like it worked.

from #ProdSalesInfo

group by ProductID

having count(distinct Name)>1

;

/*

Nothing... Nada... Zip... Empty

It's all good.

*/

Now that the original data is fixed up, our final step is to do what should have been done long ago… Create a Product “master” table:

select distinct ProductIDAnd then hunt down any code that relies on the Name column in #ProdSalesInfo… something like the following is a good start. If you’re wondering about the ObjDefLink column, please see my blog post called Hyperlinks To T-SQL Code for an explanation.

,Name

into #ProdMaster

from #ProdSalesInfo

;

/*

(32 row(s) affected)

*/

alter table #ProdMaster add constraint PK_ProdMaster primary key (ProductID)

select ObjType=type_descAnd any external application code that does direct querying of the database and makes use of the #ProdSalesInfo.Name column would have to be sought out also.

,ObjName=schema_name(schema_id)+'.'+name

,ObjDefLink

from sys.objects

cross apply (select ObjDef=object_definition(object_id)) F1

cross apply (select ObjDefLink=(select [processing-instruction(q)]=ObjDef

for xml path(''),type)) F2

where type in ('P' /* Procedures */

,'V' /* Views */

,'TR' /* Triggers */

,'FN','IF','TF' /* Functions */

)

and ObjDef like '%#ProdSalesInfo%' /* String to search for */

and ObjDef like '%Name%' /* Additional string to search for */

order by charindex('F',type) desc /* Group the functions together */

,ObjType

,ObjName

Once we find all those, then we can phase out the use of the #ProdSalesInfo.Name column and instead use the Name column in our new #ProdMaster table via a JOIN.

Finally, we can sleep easier at night, knowing the database is in a more normalized state.